Readiness survey design called into question

Photo credit: Kelsey Van Horn

November 15, 2015

On Monday, Nov. 2, co-chairs of the Strategic Resource Allocation (SRA) Coordinating Committee, Joe Garvey, vice president for business affairs and treasurer, and Dr. Alan Levine, vice president of academic affairs, distributed a survey to faculty, staff, and administration via e-mail.

The survey, titled “The Change Management Readiness Survey,” focused on Marywood’s willingness to accept change that would be brought about through the SRA process.

The e-mail stated, “As part of the SRA process, we are surveying the campus community to assess your perception of Marywood’s readiness for change.”

According to the e-mail, the survey was available from Nov. 2 until 4:30 p.m. on Friday, Nov. 6.

Levine said the survey was published in 2005 by HRD Press. According to Amazon’s website, the survey was written by SRA consultant Goldstein; Pat Sanaghan, president of the Sanaghan Group, another consulting firm; and Avik Roy, a journalist and the opinion editor at Forbes.

According to Levine, the distribution of this survey is part of Goldstein’s reallocation process and has been used at many other colleges.

“The goal is to help us understand better our, or any organization’s, capacity for change,” said Levine. “Capacity for change is a critical piece and so it should give us a read on our capacity for change.”

At the start of the survey, participants were asked to indicate whether they were faculty, administration, professional staff, or support staff. They were also asked whether they were serving on one of the SRA committees: the coordinating committee, the facilitation team, the academic task force, or the support program task force.

The survey consisted of 50 statements related to university employees’ perceptions of change, followed by three questions asking participants to rate their feelings toward the “change effort.”

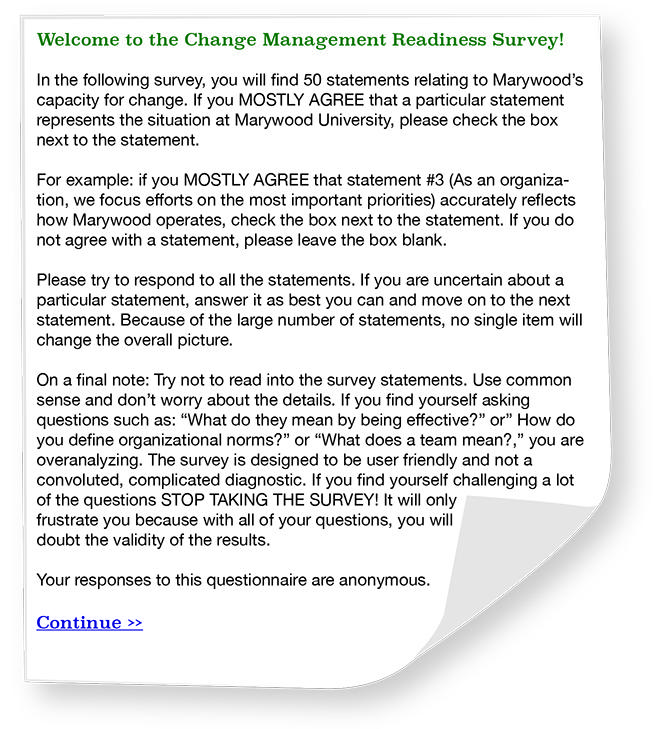

Each of statements one through 50 had a single corresponding box labeled “mostly agree.” Participants who did not “mostly agree” could respond only by leaving the box blank.

“If you mostly agree that a particular statement represents the situation at Marywood University, please check the box next to the statement,” stated the survey’s instructions. “If you do not mostly agree with a statement, please leave the box blank.”

The final three questions related to the change effort, and participants rated each on a scale from one to ten, with one labeled “low” and ten labeled “high.”

The e-mail sent to participants also included instructions on how to answer the survey questions.

“Please try to respond to all the statements,” the instructions read. “If you are uncertain about a particular statement, answer it as best you can and move on to the next statement. Because of the large number of statements, no single item will change the overall picture.”

The instructions said the responses to the questionnaire would be anonymous.

Lastly, the instructions stated: “Try not to read into the survey statements. Use common sense and don’t worry about the details. If you find yourself asking questions such as: ‘what do they mean by being effective?’ or ‘How do you define organizational norms?’ or ‘What does a team mean?,’ you are overanalyzing. The survey is designed to be user friendly and not a convoluted, complicated diagnostic. If you find yourself challenging a lot of the questions STOP TAKING THE SURVEY! It will only frustrate you because with all of your questions, you will doubt the validity of the results.”

Levine said the distribution of this survey was a part of Goldstein’s Strategic Resource Allocation plan from the very beginning.

“We just have hired Goldstein as a consultant and decided to give this survey as his consultancy suggests we should,” said Levine.

When asked about the validity of a survey that gives participants only a “mostly agree” box to select, Levine stated, “It’s not even a disagree. Either you feel that, or you don’t.”

According to Levine, the survey is only meant to assess an organization’s capacity to change.

“I think that the intention of the survey is to assess the organization’s capacity for change and I think that the way this survey has been designed will allow us to do that with that one response,” said Levine.

Dr. Craig Johnson, math professor and Faculty Senate president, said he had never before seen a survey that offered only one response option for the majority of its questions.

“I think few people would disagree it’s a poorly constructed survey with oddly worded instructions,” said Johnson. “The idea of having a survey was a good one. It would be nice if it was a better tool.”

Dr. Ed O’Brien, professor of psychology and counseling, whose areas of expertise include designing surveys such as these, expressed stronger sentiments against the survey than Johnson did.

“I’ve been doing this for 35 or 40 years now,” O’Brien said, “and this is probably the worst survey that I’ve ever seen.”

O’Brien wrote a lengthy critique of the survey outlining seven criticisms, including the lack of any response option besides “Mostly Agree.”

O’Brien said that, whenever any survey is taken to quantify opinions on a given topic, it must be assumed that there will be a variety of opinions to be expressed.

However, offering only a “Mostly Agree” option leaves participants no way to express any other opinion.

According to O’Brien, this creates a major issue.

“Non-response is uninterpretable,” O’Brien said. “Non-response could be ‘I don’t have an opinion,’ it could be ‘I have a negative opinion,’ or that ‘I don’t understand the question.’ That’s why you have to ask, so you can make sense of a non-response…When they go to score this, I don’t know how they’re going to score it, because non-response is not a meaningful numeric response.”

Other issues addressed by O’Brien in his critique of the survey include the phrasing of the directions and the questions themselves.

O’Brien suggests that the directions that ask participants to “STOP TAKING THE SURVEY” if they find themselves “challenging a lot of the questions” are problematic.

“Directions for the survey were biased and were likely to suppress any expression of concerns about the campus leadership or climate,” O’Brien wrote in his critique.

O’Brien also suggests that the questions are written in a way that makes responding difficult, citing the ambiguity of some questions.

O’Brien specifically cites question number 8, which asks survey-takers whether they “Mostly Agree” with the statement “We have the discipline to successfully accomplish tasks on a daily basis,” and identifies five different possible meanings of it. This ambiguity makes the question, and others like it, difficult to answer meaningfully.

When asked if this survey and the problems he addressed with it would skew the results unfairly in a positive manner for the university, O’Brien answered simply.

“It has to.”

Goldstein could not be reached for comment.

Contact the writers:

[email protected] (@PKernanTWW),